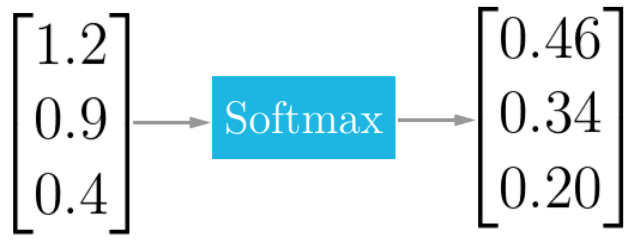

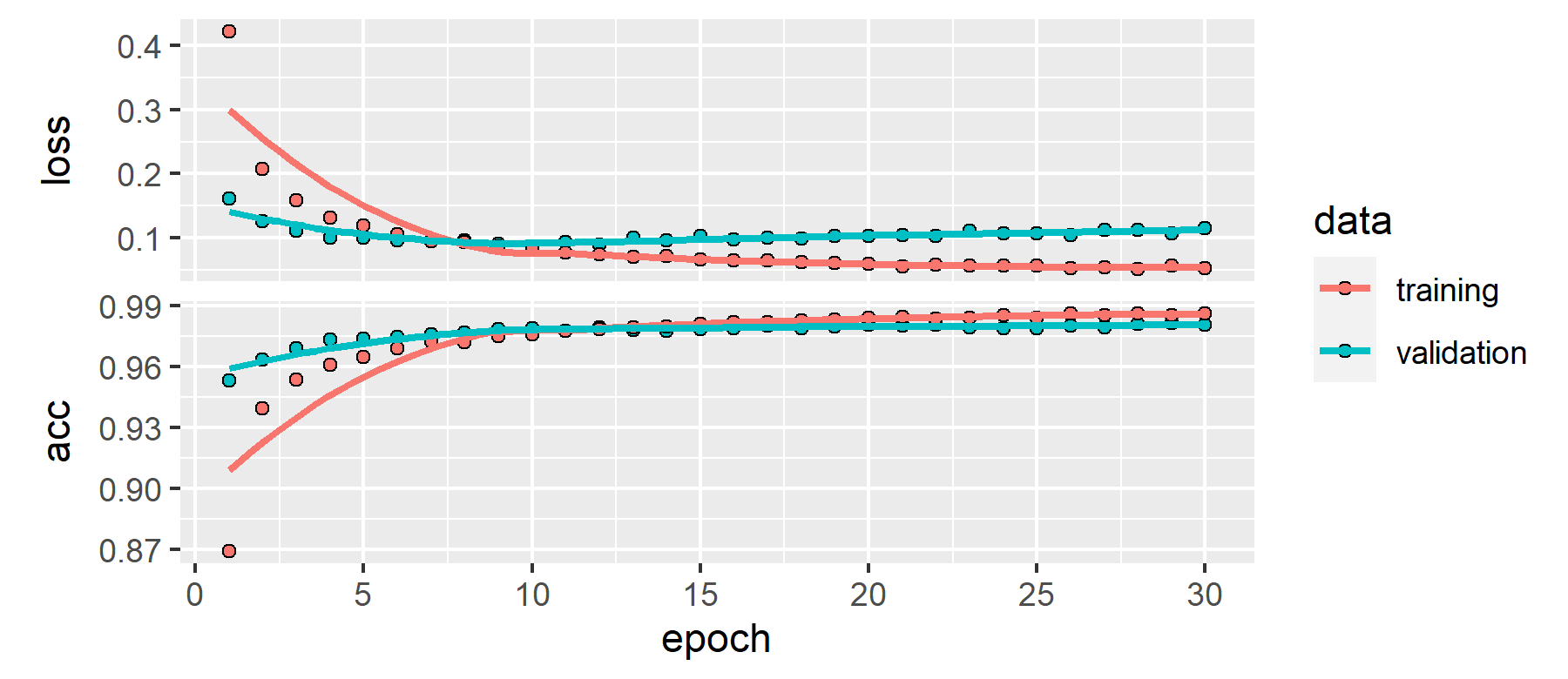

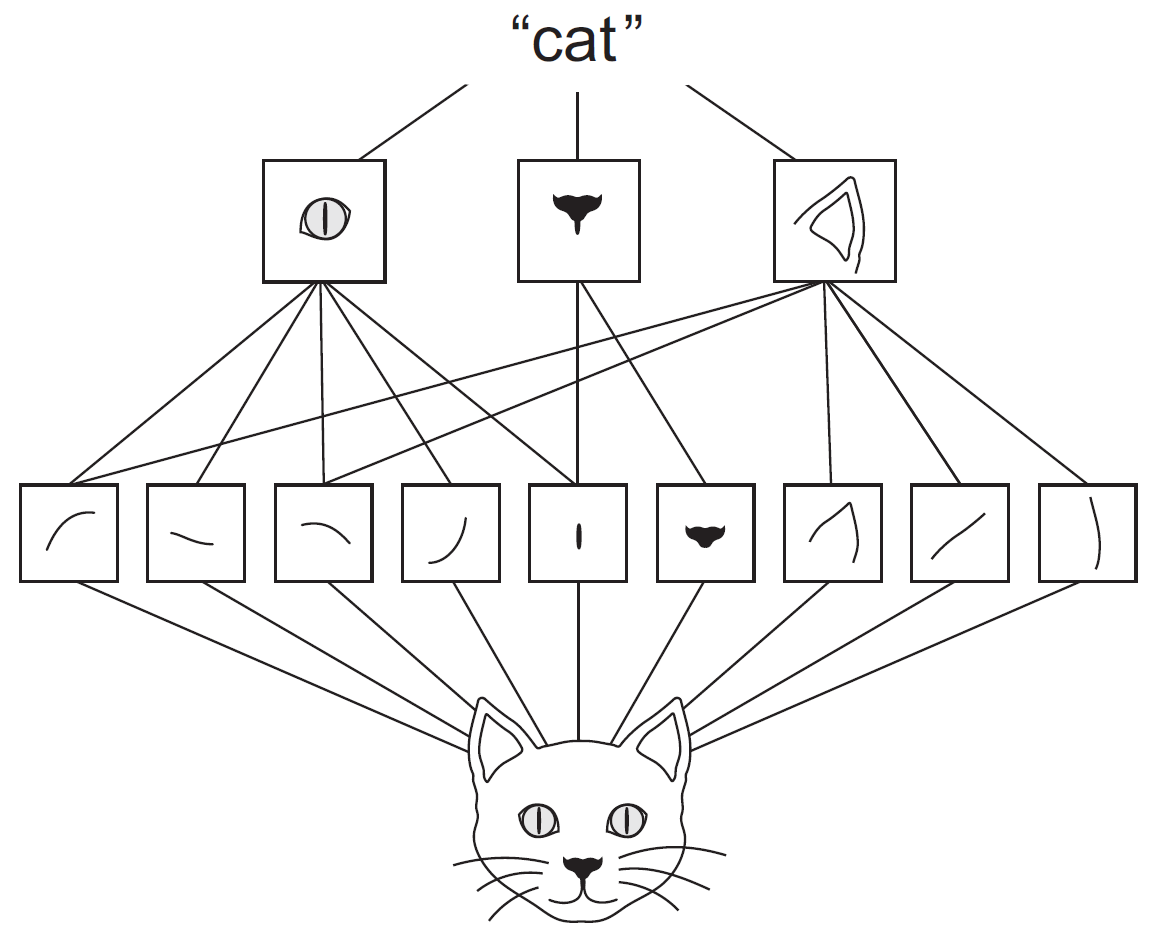

class: center, inverse <br><br><br> # Intro to Deep Learning ## (and Computer Vision) <br><br> ## Yu-Kai Lin ### Presented at CIS 9390<br> April 15, 2022 --- ## What I want to share ... * What deep learning is * What it can achieve * How it works * Deep learning frameworks * A concrete example .footnote[ <hr> [**Acknowledgements**] The materials in the following slides are based on the source(s) below: * [Deep Learning with R](https://www.amazon.com/Deep-Learning-R-Francois-Chollet/dp/161729554X) by Francois Chollet and J.J. Allaire * [R interface to Keras](https://keras.rstudio.com/) ] --- ## Prerequisites ```r install.packages("tidyverse") install.packages("keras") library(tidyverse) library(keras) # Install keras backend, including Miniconda and relevant python packages install_keras() # will take a while # Once the installation is completed, RStudio may restart the R session library(tidyverse) library(keras) ``` --- ## AI, ML, and DL .center[<img src="https://dl.dropboxusercontent.com/s/l0j66ozmgl06cli/AI-ML-DL.PNG" alt="AI, ML, and DL" height="450">] .footnote[ <hr> Source: http://fortune.com/ai-artificial-intelligence-deep-machine-learning/ ] --- ## Classical AI vs. ML .blue[**A machine-learning system is _trained_ rather than explicitly programmed.**] What machine learning does is essentially searching for useful representations of some input data, within a predefined space of possibilities, using guidance from a feedback signal. .center[<img src="https://dl.dropboxusercontent.com/s/h3qihmsspoeq2lx/Classical%20AI%20vs.%20ML.svg" alt="Classical AI vs. ML" height="280">] --- ## Classical ML vs DL .blue[**Rules are essentially a representation of the data.**] The _deep_ in _deep learning_ isn't a reference to any kind of deeper understanding achieved by the approach; rather, it stands for this idea of successive layers of representations. In other words, DL is a form of progressive _data distillation_. .center[<img src="https://dl.dropboxusercontent.com/s/z9gnahn01m7qw64/Classical%20ML%20vs%20DL.svg" alt="Classical ML vs. DL" height="300">] --- ## Neural networks (NNs) In deep learning, these layered representations are (almost always) learned via models called _neural networks_, structured in literal layers stacked on top of each other. .center[<img src="https://dl.dropboxusercontent.com/s/ygcmnzkm27tdrpc/DL%20layers%20of%20representations.png" alt="DL Layered Representations" height="400">] --- ## Principles in building NNs (1) .blue[**1. A neural network is parameterized by its weights**] .center[<img src="https://dl.dropboxusercontent.com/s/ulrbc5dxce9px8d/NN%20weights.PNG" alt="NN weights" height="400">] --- ## Principles in building NNs (2) .blue[**2. A loss function measures the quality of the network's output**] .center[<img src="https://dl.dropboxusercontent.com/s/lq3bhf8r6s9a0f8/NN%20loss%20function.PNG" alt="NN loss function" height="450">] --- ## Principles in building NNs (3) .blue[**3. The loss score is used as a feedback signal to adjust the weights**] .center[<img src="https://dl.dropboxusercontent.com/s/g3v86085i7qujgi/NN%20loss%20optimizer.PNG" alt="NN loss optimizer" height="450">] --- ### Why DL is suddenly becoming so popular? * NNs were already well understood in the 1980s * Kernal methods (e.g., support vector machines) and tree-based methods (e.g., random forests) were preferred techniques in ML in 1990s and 2000s * We return to NNs around 2010 because of four technical advances: * Hardware: GPU, [TPU](https://cloud.google.com/tpu/), [CUDA](https://developer.nvidia.com/about-cuda), [BLAS ](https://en.wikipedia.org/wiki/Basic_Linear_Algebra_Subprograms) * Datasets and benchmarks: [ImageNet](http://www.image-net.org/) (1.4 million images; 1,000 image categories) * Algorithmic advance: * Better activation functions for neural layers * Better weight-initialization schemes * Better optimization schemes * High level **DL frameworks** for Python and R users --- ## Self-driving cars .center[<img src="https://dl.dropboxusercontent.com/s/zvtfb5ti2f2p6wn/Waymo%20fully%20self-driving%20cars.PNG" alt="Waymo's fully self-driving cars" height="400">] .footnote[ <hr> Source: YouTube - [Waymo's fully self-driving cars are here](https://www.youtube.com/watch?v=aaOB-ErYq6Y) ] --- ## Semantic segmentation .center[<img src="https://dl.dropboxusercontent.com/s/3ycbb21ho63vge2/Semantic%20Segmentation%20with%20Deep%20Learning.png" alt="Semantic Segmentation">] .footnote[ <hr> * Source: http://blog.qure.ai/notes/semantic-segmentation-deep-learning-review * Extra: https://towardsdatascience.com/background-removal-with-deep-learning-c4f2104b3157 ] --- ## Affect detection .center[<img src="https://dl.dropboxusercontent.com/s/hi047dj9v0v2oeo/Facial%20Expressions.jpg" alt="Facial Expressions" height="400">] .footnote[ <hr> * Source: http://www.personal.psu.edu/afr3/blogs/siowfa12/2012/10/facial-expressions.html * Extra: https://www.affectiva.com/ ] --- ## Face Generator .center[ <video height="350" controls> <source src="https://dl.dropboxusercontent.com/s/18akb5muq1hujcu/nvidia-gan-face.mp4#t=30,59" type="video/mp4"> </video> ] .footnote[ <hr> * Source: YouTube - [NVIDIA’s Hyperrealistic Face Generator](https://www.youtube.com/watch?v=kSLJriaOumA) * Extra: https://www.thispersondoesnotexist.com/ ] --- ## Find Waldo .center[ <video height="350" controls> <source src="https://dl.dropboxusercontent.com/s/g328h7e9qum39t5/find-waldo.mp4#t=20,31" type="video/mp4"> </video> ] .footnote[ <hr> * Source: YouTube - [There's Waldo is a robot that finds Waldo](https://www.youtube.com/watch?v=-i7HMPpxB-Y) ] --- ### Basic math concepts in neural networks .smaller[ **Tensors** are a generalization of vectors and matrices to an arbitrary number of dimensions .left-column-half[ * Scalars (0D tensors) ```r x <- 10 ``` * Vectors (1D tensors) ```r x <- c(12, 3, 6, 14, 10) str(x) ``` ``` ## num [1:5] 12 3 6 14 10 ``` ```r dim(as.array(x)) ``` ``` ## [1] 5 ``` That's a five-dimensional vector. Don’t confuse a 5D vector with a 5D tensor! ] .right-column-half[ * Matrices (2D tensors) ```r x <- matrix(rep(0, 3*5), nrow=3, ncol=5) dim(x) ``` ``` ## [1] 3 5 ``` * 3D and higher-dimensional tensors ```r x <- array(rep(0,2*3*2), dim=c(2,3,2)) dim(x) ``` ``` ## [1] 2 3 2 ``` By packing 3D tensors in an array, you can create a 4D tensor, and so on. In DL, you’ll generally manipulate tensors that are 0D to 4D, although you may go up to 5D if you process video data. ] ] --- ## Key attributes A tensor is defined by three key attributes: * **Number of axes (rank)**: For instance, a 3D tensor has three axes, and a matrix has two axes. * **Shape**: This is an integer vector that describes how many dimensions the tensor has .red[along each axis]. For instance, the previous matrix example has shape `(3, 5)`, and the 3D tensor example has shape `(2, 3, 2)`. * **Data type**: This is the type of the data contained in the tensor; for instance, a tensor's type could be integer or double. --- To make this more concrete, let's look at an example: .red[**MNIST database**] (Modified National Institute of Standards and Technology database) * A large database of handwritten digits that is commonly used for training various image processing systems * Contains 60,000 training images and 10,000 testing images * 28 x 28 gray-scale images of handwritten digits like these * An 8-bit integer giving a range of possible values from 0 to 255 * Typically zero is taken to be black, and 255 is taken to be white .center[<img src="https://dl.dropboxusercontent.com/s/u4qemv2ts514a02/fig_mnist_groundtruth.png" alt="MNIST" width="800">] .footnote[ <hr> Image source: https://ml4a.github.io/ml4a/neural_networks/ ] --- ```r mnist <- dataset_mnist() # get the data from the internet; ~200MB #mnist <- read_rds("mnist.rds") # if you have saved the data locally x_train <- mnist$train$x y_train <- mnist$train$y x_test <- mnist$test$x y_test <- mnist$test$y ``` .smaller[ .left-column-half[ Next, we display the number of axes of the tensor `x_train`: ```r length(dim(x_train)) ``` ``` ## [1] 3 ``` Here’s its shape: ```r dim(x_train) ``` ``` ## [1] 60000 28 28 ``` And this is its data type: ```r typeof(x_train) ``` ``` ## [1] "integer" ``` ] .right-column-half[ So what we have here is a 3D tensor of integers. More precisely, it’s an array of 60,000 matrices of 28 × 28 integers. Each such matrix is a gray-scale image, with coefficients between 0 and 255. Let’s plot the fifth digit in this 3D tensor: ```r digit <- x_train[5,,] plot(as.raster(digit, max = 255)) ``` <!-- --> ] ] --- ## Tips **Cache the dataset locally** Reading data from the Internet is slow. If we are going to work on this data set multiple times, it's faster if we save the data to our working directory so that we can read it back later. ```r write_rds(mnist, "mnist.rds") mnist <- read_rds("mnist.rds") ``` **Using the multi-assignment (`%<-%`) operator** The multi-assignment version is preferable because it's more compact. The datasets built into Keras are all nested lists of training and test data. Here, we use the multi-assignment operator (`%<-%`) from the `zeallot` package to unpack the list into a set of distinct variables. ```r c(c(x_train, y_train), c(x_test, y_test)) %<-% mnist ``` --- ## Manipulating tensors in R In the previous example, we _selected_ a specific digit alongside the first axis using the syntax `train_images[i,,]`. Selecting specific elements in a tensor is called **tensor slicing**. The following example selects digits #10 to #99 and puts them in an array of shape `(90, 28, 28)`: ```r my_slice <- x_train[10:99,,] dim(my_slice) ``` ``` ## [1] 90 28 28 ``` Equivalently, ```r my_slice <- x_train[10:99,1:28,1:28] dim(my_slice) ``` ``` ## [1] 90 28 28 ``` --- ## The notion of data batches In general, the first axis in all data tensors you'll come across in deep learning will be the .red[_samples axis_] (sometimes called the _samples dimension_). In the MNIST example, samples are images of digits. Deep-learning models don't process an entire dataset at once; rather, they break the data into small batches. Concretely, here's one batch of our MNIST digits, with batch size of 128: ```r batch <- x_train[1:128,,] ``` And here's the next batch: ```r batch <- x_train[129:256,,] ``` When considering such a batch tensor, the first axis is called the _batch axis_ or _batch dimension_. This is a term you'll frequently encounter when using deep-learning libraries. --- ## Real-world examples of data tensors **Vector data**—2D tensors of shape (samples, features) **Time series data or sequence data**—3D tensors of shape (samples, .green[timesteps], features) .left-column-large[ **Images**—4D tensors of shape (samples, height, width, .red[channels]) or (samples, .red[channels], height, width) .small[ * Some DL libraries use the _channels-last_ convention (TensorFlow) while others use the _channels-first_ convention (Theano) * A batch of 128 gray-scale images of size 256 × 256 could thus be stored in a tensor of shape `(128, 256, 256, 1)`, and a batch of 128 color images could be stored in a tensor of shape `(128, 256, 256, 3)` ] **Video**—5D tensors of shape (samples, .blue[frames], height, width, channels) or (samples, .blue[frames], channels, height, width) ] .right-column-small[ .center[<img src="https://dl.dropboxusercontent.com/s/swmnq31dwoa210i/4D-tensor.PNG" alt="4D tensor">] ] --- .left-column-6[ ## Quiz Suppose we have a 5 minutes HD (1920×1080) YouTube video clip which runs 30 frames per second. How many values are in this video data tensor? * How many sample? * How many frames? * How many channels? * Height? * Width? Total? ] .right-column-4[ .right[<img src="https://dl.dropboxusercontent.com/s/haufkphp3vrkbxg/Quiz-Time.svg" alt=" " height="250">] ] --- ## Deep learning frameworks You typically won't need to build deep learning applications from scratch. Most people use an existing deep learning framework/toolkit to help them build their applications. Popular deep learning frameworks: * [TensorFlow](https://www.tensorflow.org/): Powerful, sophisticated, but difficult to learn. Backed by Google. * [Keras](https://keras.io/): Runs on top of TensorFlow, Theano, or CNTK; not as sophisticated as TensorFlow but still powerful; beginner friendly * [PyTorch](https://pytorch.org/): Powerful and flexible for customization and fast prototyping; backed by Facebook. * ... Although TensorFlow and Keras are written in Python, there are R interfaces that allow you to use these frameworks in R (see [here](https://tensorflow.rstudio.com/) and [here](https://keras.rstudio.com/)). --- Jeff Hale made a very interesting summary and comparison for these frameworks: .center[<img src="https://dl.dropboxusercontent.com/s/fgkm1p86ldxpasg/Deep%20Learning%20Framework%20Power%20Scores%202018.png" alt=" join" height="450">] .footnote[ <hr> Source: https://towardsdatascience.com/deep-learning-framework-power-scores-2018-23607ddf297a ] --- class: center, middle <img src="https://dl.dropboxusercontent.com/s/66t8bsdcsqu8k3n/keras-logo-2018-large-1200.png" alt="Keras" height="200"> ### We will learn how to use the R interface to Keras for deep learning --- ### Developing with Keras: a quick overview **1. Prepare the data** ```r str(mnist) ``` ``` ## List of 2 ## $ train:List of 2 ## ..$ x: int [1:60000, 1:28, 1:28] 0 0 0 0 0 0 0 0 0 0 ... ## ..$ y: int [1:60000(1d)] 5 0 4 1 9 2 1 3 1 4 ... ## $ test :List of 2 ## ..$ x: int [1:10000, 1:28, 1:28] 0 0 0 0 0 0 0 0 0 0 ... ## ..$ y: int [1:10000(1d)] 7 2 1 0 4 1 4 9 5 9 ... ``` ```r ## The x data is a 3-d array (images,width,height) of of grayscale values # View(mnist$train$x[1,,]) # To see the first training example x_train <- mnist$train$x y_train <- mnist$train$y x_test <- mnist$test$x y_test <- mnist$test$y str(x_train) ``` ``` ## int [1:60000, 1:28, 1:28] 0 0 0 0 0 0 0 0 0 0 ... ``` --- ```r ## Reshape x from 3d to 2d (by default, row-major) x_train <- array_reshape(x_train, c(nrow(x_train), 784)) x_test <- array_reshape(x_test, c(nrow(x_test), 784)) str(x_train) # View(x_train[1,]) ``` ``` ## num [1:60000, 1:784] 0 0 0 0 0 0 0 0 0 0 ... ``` .center[<img src="https://dl.dropboxusercontent.com/s/z5wnfa18ohgxlwk/mnist-input.png.png" alt="mnist-input" width="750">] .footnote[ <hr> Image source: https://ml4a.github.io/ml4a/neural_networks/ ] --- ```r ## Rescale values so that they are between 0 and 1 x_train <- x_train / 255 x_test <- x_test / 255 # View(x_train[1,]) ## Prepare the y data ## The y data is an integer vector with values ranging from 0 to 9 str(y_train) # View(y_train) ``` ``` ## int [1:60000(1d)] 5 0 4 1 9 2 1 3 1 4 ... ``` ```r ## Deep learning models prefer binary values rather than integers y_train <- to_categorical(y_train, 10) y_test <- to_categorical(y_test, 10) str(y_train) # View(y_train) ``` ``` ## num [1:60000, 1:10] 0 1 0 0 0 0 0 0 0 0 ... ``` --- **2. Defining the Model** ```r ## A sequential model is just a linear stack of layers model <- keras_model_sequential() ## Define the structure of the neural net model %>% # A dense layer is a fully connected layer layer_dense(units = 256, activation = 'relu', input_shape = c(784)) %>% layer_dropout(rate = 0.4) %>% # randomly set 40% of weights to 0 layer_dense(units = 128, activation = 'relu') %>% layer_dropout(rate = 0.3) %>% # this helps prevent overfitting layer_dense(units = 10, activation = 'softmax') # probability of each class summary(model) ``` ``` ## Model: "sequential" ## ________________________________________________________________________________ ## Layer (type) Output Shape Param # ## ================================================================================ ## dense_2 (Dense) (None, 256) 200960 ## dropout_1 (Dropout) (None, 256) 0 ## dense_1 (Dense) (None, 128) 32896 ## dropout (Dropout) (None, 128) 0 ## dense (Dense) (None, 10) 1290 ## ================================================================================ ## Total params: 235,146 ## Trainable params: 235,146 ## Non-trainable params: 0 ## ________________________________________________________________________________ ``` --- .left-column-half[ **ReLU**: rectified linear unit .center[$$R(x)=max(0,x)$$] ReLU activations are the simplest non-linear activation function. Research has shown that ReLUs result in much faster training for large networks. .center[<img src="https://dl.dropboxusercontent.com/s/inxftqu9jui07ca/relu.png" alt="ReLU" height="260"> ] ] .right-column-half[ **Softmax**: normalized exponential function .center[ `\(\sigma(z_j)=\frac{e^{z_j}}{\sum_{k=1}^{K}e^{z_k}}\)` for all `\(j=1,\dots,K\)` ] Softmax squashes values in a vector to be between 0 and 1. So the outputs represent the probability of each class category.  ] .footnote[ <hr> Image sources: 1. https://ml4a.github.io/ml4a/neural_networks/ 2. https://github.com/Kulbear/deep-learning-nano-foundation/wiki/ReLU-and-Softmax-Activation-Functions ] --- Choosing the right **last-layer activation and loss function**: | Problem type | Last-layer activation | Loss function | |-----------------------------------------|-----------------------|--------------------------------| | Binary classification | `sigmoid` | `binary_crossentropy` | | Multiclass, single-label classification | `softmax` | `categorical_crossentropy` | | Multiclass, multilabel classification | `sigmoid` | `binary_crossentropy` | | Regression to arbitrary values | None | `mse` | | Regression to values between 0 and 1 | `sigmoid` | `mse` or `binary_crossentropy` | --- ```r ## Compile the model with appropriate loss function, optimizer, and metrics model %>% compile( optimizer = "adam", # see next slide loss = "categorical_crossentropy", # since we have 10 categoreis metrics = c("accuracy") # for classification ) ``` Before training a model, you need to **configure the learning process**, which is done via the `compile` method. It receives three arguments: * An optimizer. This could be the string identifier of an existing optimizer (such as `adam`, `rmsprop`, or `adagrad`), or an instance of the Optimizer class. [List of existing optimizers](https://keras.io/optimizers/) * A loss function. This is the objective that the optimizer will try to minimize. It can be the string identifier of an existing loss function, or it can be a customized objective function. [List of existing loss functions](https://keras.io/losses/) * A list of metrics. A metric is used to judge the performance of your model. This is only for you to look at and has nothing to do with the optimization process. A metric could be the string identifier of an existing metric or a custom metric function. [List of existing metrics](https://keras.io/metrics/) --- Each of the optimizers is a specific variant of **stochastic gradient descent (SGD)**. SGD optimizers explore the loss surface based on the slope (gradient) of the current state. To get to the top as fast as they can, they take big steps in the steepest direction and do smaller and smaller steps as they come further to the top to avoid overshooting it. .red[**The Bird Box challenge**] .left-column-small[ .left[<img src="https://dl.dropboxusercontent.com/s/rz8nccobi1o303x/bird-box.jpg" alt="Bird Box" height="350">] ] .right-column-large[ .right[<img src="https://dl.dropboxusercontent.com/s/v871ghhjbuqnxen/SGD-illustration.png" alt="A hill" height="350">] ] --- **The learning process dynamics of different optimizers:** .left-column-half[  Contours of a loss surface and time evolution of different optimization algorithms ] .left-column-half[  .right[ A saddle point in the optimization landscape, where the curvature along different dimension has different signs (one dimension curves up and another down) ] ] .footnote[ <hr> Image source: http://cs231n.github.io/neural-networks-3/ ] --- **3. Training and Evaluation** ```r ## Use x_train and y_train for training history <- model %>% fit( x_train, y_train, batch_size = 128, # a set of 128 samples epochs = 30, # let's go through x_train 30 times validation_split = 0.2 # use the last 20% of train data for validation ) plot(history) ``` <!-- --> --- ```r ## Use x_test and y_test for evaluation model %>% evaluate(x_test, y_test) ``` ``` ## loss accuracy ## 0.1222683 0.9801000 ``` ```r model %>% predict(x_test) %>% k_argmax() %>% as.integer() ``` ``` ## [1] 7 2 1 0 4 1 4 9 5 9 0 6 9 0 1 5 9 7 3 4 9 6 6 5 4 0 7 4 0 1 3 1 3 4 7 2 7 ## [38] 1 2 1 1 7 4 2 3 5 1 2 4 4 6 3 5 5 6 0 4 1 9 5 7 8 9 3 7 4 6 4 3 0 7 0 2 9 ## [75] 1 7 3 2 9 7 7 6 2 7 8 4 7 3 6 1 3 6 9 3 1 4 1 7 6 9 ## [ reached getOption("max.print") -- omitted 9900 entries ] ``` --- **4. Save and Reuse** ```r # save the model to your current working directory model %>% save_model_hdf5("mnist_model.h5") ``` The following components of the model are saved: * The model architecture, allowing to re-instantiate the model. * The model weights. * The state of the optimizer, allowing to resume training exactly where you left off. This allows you to save the entirety of the state of a model in a single file. Saved models can be re-instantiated via `load_model_hdf5()`. The model returned by `load_model_hdf5()` is a compiled model ready to be used. ```r # read from your current working directory # make sure you have the file in that folder new_model <- load_model_hdf5("mnist_model.h5") ``` --- ## Convolutional neural networks Also known as CNNs or **convnets** Convnets are a type of deep neural networks that have been extensively used in computer vision applications. .center[<img src="https://dl.dropboxusercontent.com/s/4tr1854bqniy11b/convnet-illustration.jpeg" alt="convnet" height="350">] .footnote[ <hr> Image Source: https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53 ] --- ### Instantiating a small convnet A basic convnet looks like a stack of `layer_conv_2d` and `layer_max_pooling_2d` layers. ```r model <- keras_model_sequential() %>% layer_conv_2d(input_shape = c(28, 28, 1), # input image shape filters = 32, # output channel size kernel_size = c(3, 3), activation = "relu") %>% layer_max_pooling_2d(pool_size = c(2, 2)) %>% layer_conv_2d(filters = 64, kernel_size = c(3, 3), activation = "relu") %>% layer_max_pooling_2d(pool_size = c(2, 2)) %>% layer_conv_2d(filters = 64, kernel_size = c(3, 3), activation = "relu") ``` Importantly, a convnet takes as input tensors of shape `(image_height, image_width, image_channels)` (not including the batch dimension). In this case, we’ll configure the convnet to process inputs of size `(28, 28, 1)`, which is the format of MNIST images. We’ll do this by passing the argument `input_shape = c(28, 28, 1`) to the first layer. --- Let's display the architecture of the convnet so far: ```r summary(model) ``` ``` ## Model: "sequential_1" ## ________________________________________________________________________________ ## Layer (type) Output Shape Param # ## ================================================================================ ## conv2d_2 (Conv2D) (None, 26, 26, 32) 320 ## max_pooling2d_1 (MaxPooling2D) (None, 13, 13, 32) 0 ## conv2d_1 (Conv2D) (None, 11, 11, 64) 18496 ## max_pooling2d (MaxPooling2D) (None, 5, 5, 64) 0 ## conv2d (Conv2D) (None, 3, 3, 64) 36928 ## ================================================================================ ## Total params: 55,744 ## Trainable params: 55,744 ## Non-trainable params: 0 ## ________________________________________________________________________________ ``` Observations: * The output of every `layer_conv_2d` and `layer_max_pooling_2d` is a 3D tensor of shape `(height, width, channels)`. * The width and height dimensions tend to shrink as we go deeper in the network. * The number of channels is controlled by `filters` (32 or 64), the first argument passed to `layer_conv_2d`. --- ### Adding a classifier on top of the convnet ```r model <- model %>% layer_flatten() %>% # from 3d tensor to 1d tensor layer_dense(units = 64, activation = "relu") %>% layer_dense(units = 10, activation = "softmax") summary(model) ``` ``` ## Model: "sequential_1" ## ________________________________________________________________________________ ## Layer (type) Output Shape Param # ## ================================================================================ ## conv2d_2 (Conv2D) (None, 26, 26, 32) 320 ## max_pooling2d_1 (MaxPooling2D) (None, 13, 13, 32) 0 ## conv2d_1 (Conv2D) (None, 11, 11, 64) 18496 ## max_pooling2d (MaxPooling2D) (None, 5, 5, 64) 0 ## conv2d (Conv2D) (None, 3, 3, 64) 36928 ## flatten (Flatten) (None, 576) 0 ## dense_4 (Dense) (None, 64) 36928 ## dense_3 (Dense) (None, 10) 650 ## ================================================================================ ## Total params: 93,322 ## Trainable params: 93,322 ## Non-trainable params: 0 ## ________________________________________________________________________________ ``` --- ### Training the convnet on MNIST images ```r # mnist <- dataset_mnist() # get the data from the internet; ~200MB # write_rds(mnist, "mnist.rds") mnist <- read_rds("mnist.rds") # assuming that you have saved the data locally c(c(x_train, y_train), c(x_test, y_test)) %<-% mnist x_train <- array_reshape(x_train, c(60000, 28, 28, 1)) x_train <- x_train / 255 x_test <- array_reshape(x_test, c(10000, 28, 28, 1)) x_test <- x_test / 255 y_train <- to_categorical(y_train) y_test <- to_categorical(y_test) model %>% compile( optimizer = "adam", loss = "categorical_crossentropy", metrics = c("accuracy") ) model %>% fit( x_train, y_train, epochs = 5, batch_size=64 ) # To keep it simple, we only use smaller epochs and batch_size ``` --- Let's evaluate the model on the test data: ```r results <- model %>% evaluate(x_test, y_test) results ``` ``` ## $loss ## [1] 0.03152971 ## ## $acc ## [1] 0.9919 ``` -- .left-column-half[ Even thought the convent is small and we use smaller `epochs` and `batch_size`, the final accuracy turns out higher than the network we built previously (0.9801)! **WHY?** To answer this, let's dive into what `layer_conv_2d` and `layer_max_pooling_2d` do. ] .right-column-half[  ] --- ## The convolution operation The fundamental difference between a densely connected layer and a convolution layer is this: **dense layers learn .red[global] patterns in their input feature space** (for example, for an MNIST digit, patterns involving all pixels), **whereas convolution layers learn .red[local] patterns**. In the case of images, patterns are found in small 2D windows of the inputs. In the previous example, these windows were all 3 x 3 (that is, `kernel_size`). -- .red[**Image kernels**]: An image kernel is a small matrix used to apply effects like the ones we might find in Photoshop or Gimp, such as blurring, sharpening, outlining or embossing. They're also used in machine learning for 'feature extraction', a technique for determining the most important portions of an image. In this context the process is referred to more generally as ".red[_convolution_]" .larger[[**#DEMO**](http://setosa.io/ev/image-kernels/)] .footnote[ <hr> Source: http://setosa.io/ev/image-kernels/ ] --- **The convolution operation leads to two interesting properties of convnets:** -- * **The patterns they learn are translation invariant** * After learning a certain pattern in the lower-right corner of a picture, a convnet can recognize it anywhere: for example, in the upper-left corner. * Makes convnets efficient when processing images because the visual world is fundamentally translation invariant * Need fewer training samples to learn representations -- .left-column-half[ * **They can learn spatial hierarchies of patterns** * A first convolution layer will learn small local patterns such as edges, a second convolution layer will learn larger patterns made of the features of the first layers, and so on. ] .right-column-half[  ] --- ## The max-pooling operation .smaller[ The role of max pooling is to aggressively .red[**"downsample"**] the output from the previous layer. In our model, the first `layer_max_pooling_2d` reduces the shape from 26 to 13 and the second one from 11 to 5. ```r summary(model) ``` ``` ## Model: "sequential_1" ## ________________________________________________________________________________ ## Layer (type) Output Shape Param # ## ================================================================================ ## conv2d_1 (Conv2D) (None, 26, 26, 32) 320 ## max_pooling2d_1 (MaxPooling2D) (None, 13, 13, 32) 0 ## conv2d_2 (Conv2D) (None, 11, 11, 64) 18496 ## max_pooling2d_2 (MaxPooling2D) (None, 5, 5, 64) 0 ## conv2d_3 (Conv2D) (None, 3, 3, 64) 36928 ## flatten_1 (Flatten) (None, 576) 0 ## dense_1 (Dense) (None, 64) 36928 ## dense_2 (Dense) (None, 10) 650 ## ================================================================================ ## Total params: 93,322 ## Trainable params: 93,322 ## Non-trainable params: 0 ## ________________________________________________________________________________ ``` ] --- **Why downsampling?** The reason to use downsampling is to reduce the number of output coefficients to process, as well as to induce spatial-filter hierarchies by making successive convolution layers look at increasingly large windows. .center[<img src="https://dl.dropboxusercontent.com/s/69ypc2wwzkirdjt/maxpool.svg" alt="max-pooling" height="300">] --- ### Other advanced topics in computer vision .red[**Use a pretrained convnet**] The following image classification models, pre-trained on ImageNet (1.4 million labeled images and 1,000 different classes), are available in keras: .left-column-half[ * [Xception](https://keras.rstudio.com/reference/application_xception.html) * [VGG16](https://keras.rstudio.com/reference/application_vgg.html) * [VGG19](https://keras.rstudio.com/reference/application_vgg.html) * [ResNet50](https://keras.rstudio.com/reference/application_resnet50.html) * [InceptionV3](https://keras.rstudio.com/reference/application_inception_v3.html) ] .right-column-half[ * [InceptionResNetV2](https://keras.rstudio.com/reference/application_inception_resnet_v2.html) * [MobileNet](https://keras.rstudio.com/reference/application_mobilenet.html) * [MobileNetV2](https://keras.rstudio.com/reference/application_mobilenet_v2.html) * [DenseNet](https://keras.rstudio.com/reference/application_densenet.html) * [NASNet](https://keras.rstudio.com/reference/application_nasnet.html) ] .center[<img src="https://dl.dropboxusercontent.com/s/vn65xpcz29tnj1q/image-net.png" alt="ImageNet" height="250">] --- ### Other advanced topics in computer vision .red[**Data augmentation to avoid overfitting**] * **Overfitting** is a very common issue when training complex models with small datasets * Through data augmentation, we artificially generate additional training instances from existing training data, by augmenting the samples via a number of random transformations, to mitigate the risk of overfitting .center[<img src="https://dl.dropboxusercontent.com/s/zky2pqycqe7mr2l/bogi.data.augmentation.png" alt="" height="300"> ] --- ### Other advanced topics in computer vision .red[**Visualize convnets**] * Visualizing intermediate convnet outputs (intermediate activations) * Visualizing convnet filters * Visualizing heatmaps of class activation .left-column-half[  ] .right-column-half[  ] --- ## Summary * Deep learning is a powerful tool, especially for handling unstructured data * We learned how/why it works * We can implement it in a few lines of code * Analyzing images and beyond (video, text, time-series, ...) * Provided that you have an adequate computer to train/test the neural networks * Sagar Samtani, Hongyi Zhu, Balaji Padmanabhan, Yidong Chai, and Hsinchun Chen. (2020) “**Deep Learning for Information Systems Research**,” arXiv:2010.05774 [cs, stat] (available at http://arxiv.org/abs/2010.05774) --- class: center, inverse <br> #Questions? <hr> <br> ## Intro to Deep Learning ### (and Computer Vision) <br> ### Yu-Kai Lin #### Presented at CIS 9390<br> April 15, 2022