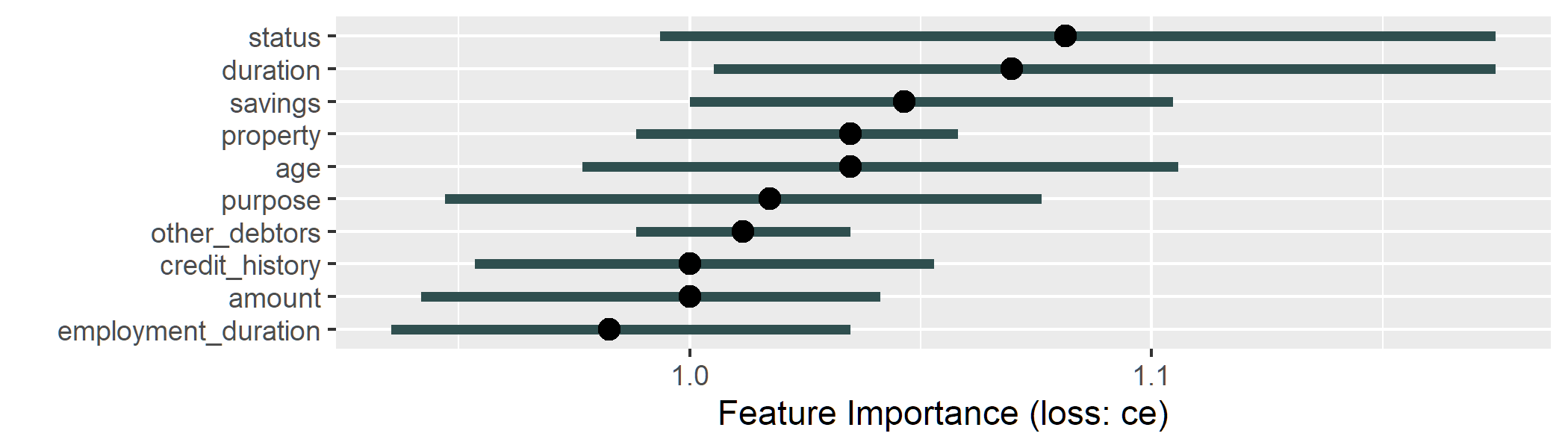

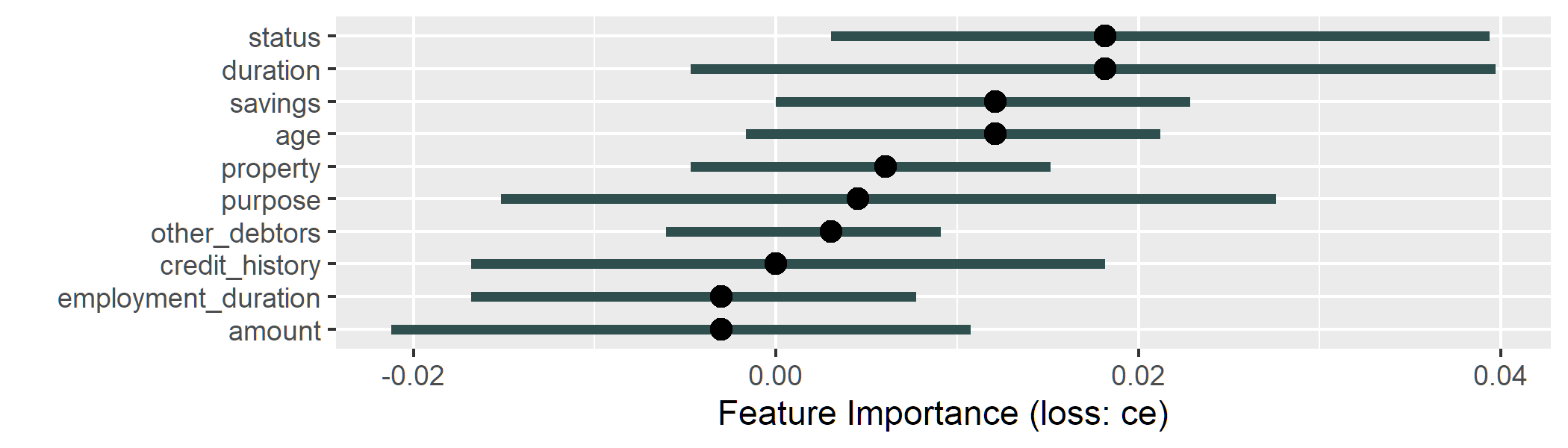

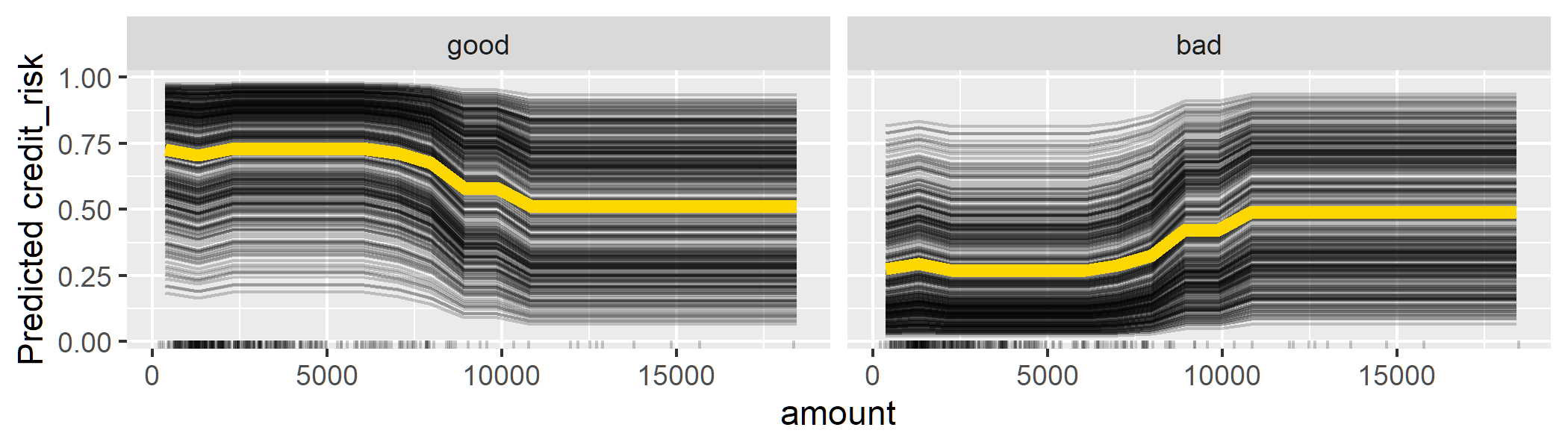

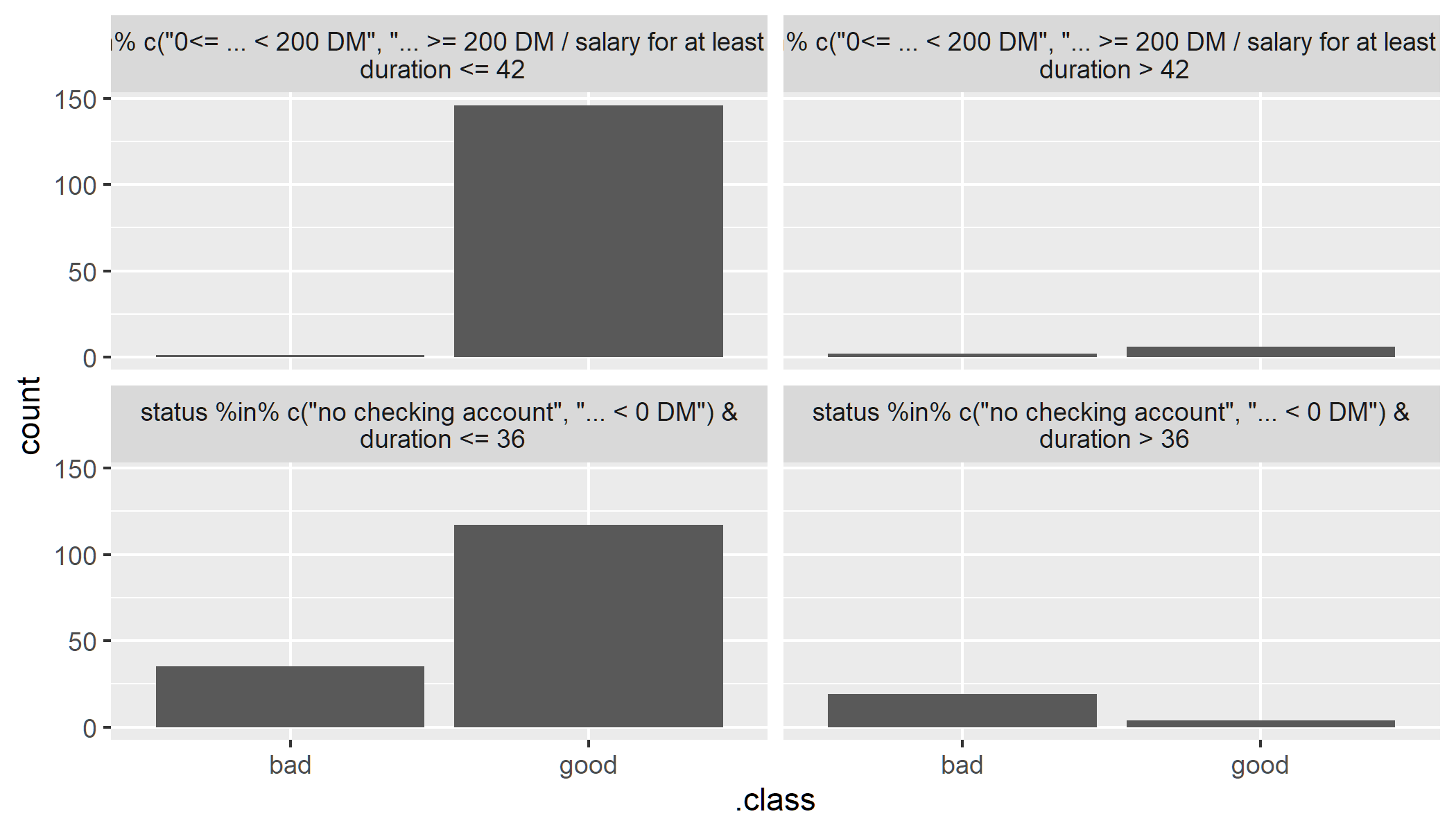

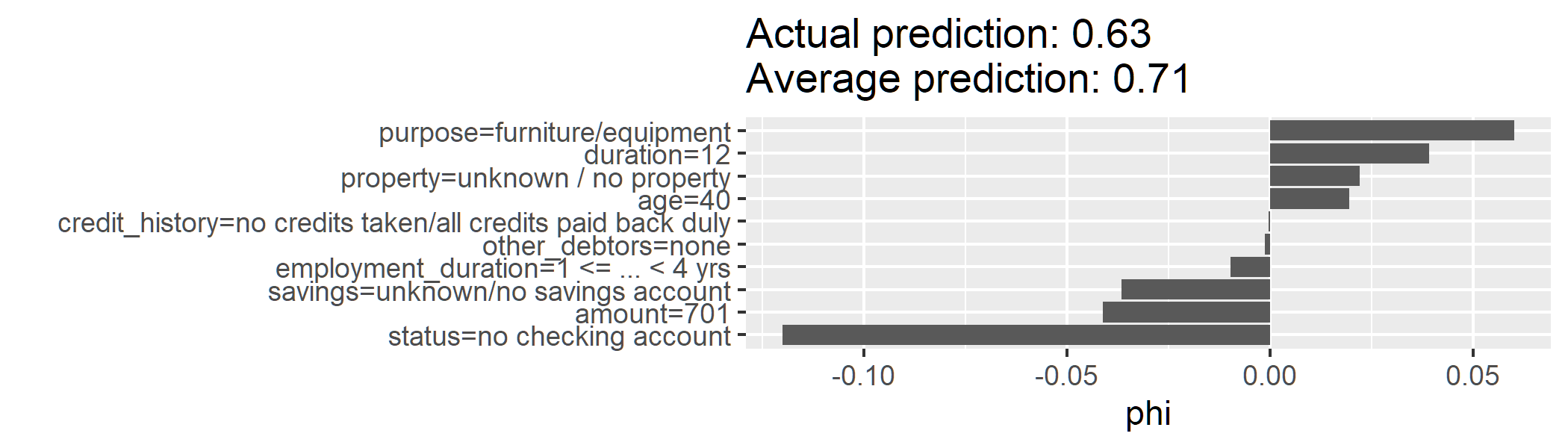

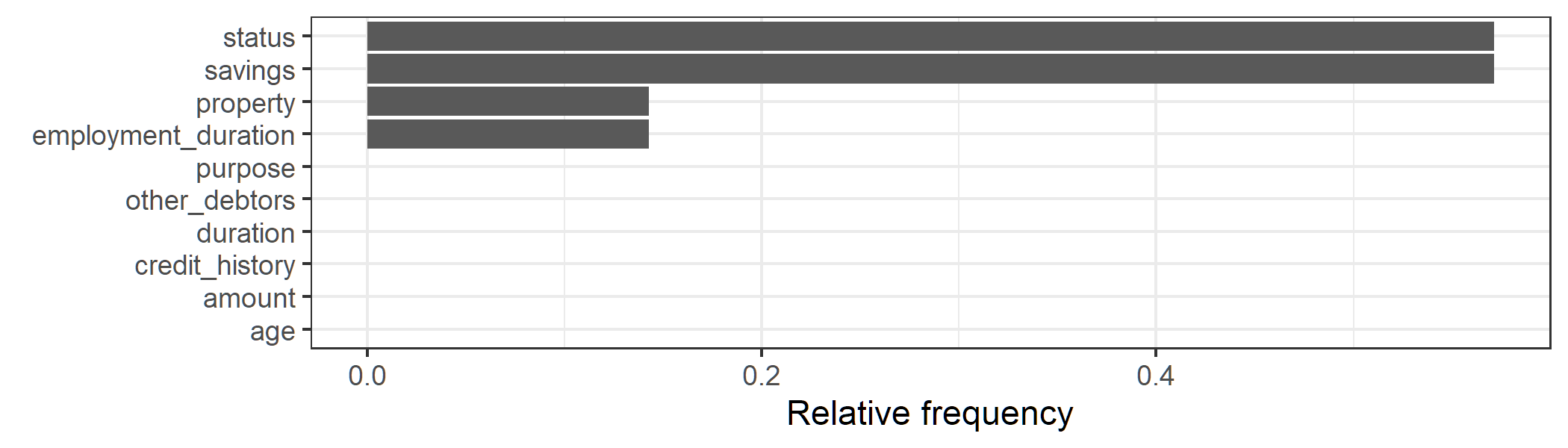

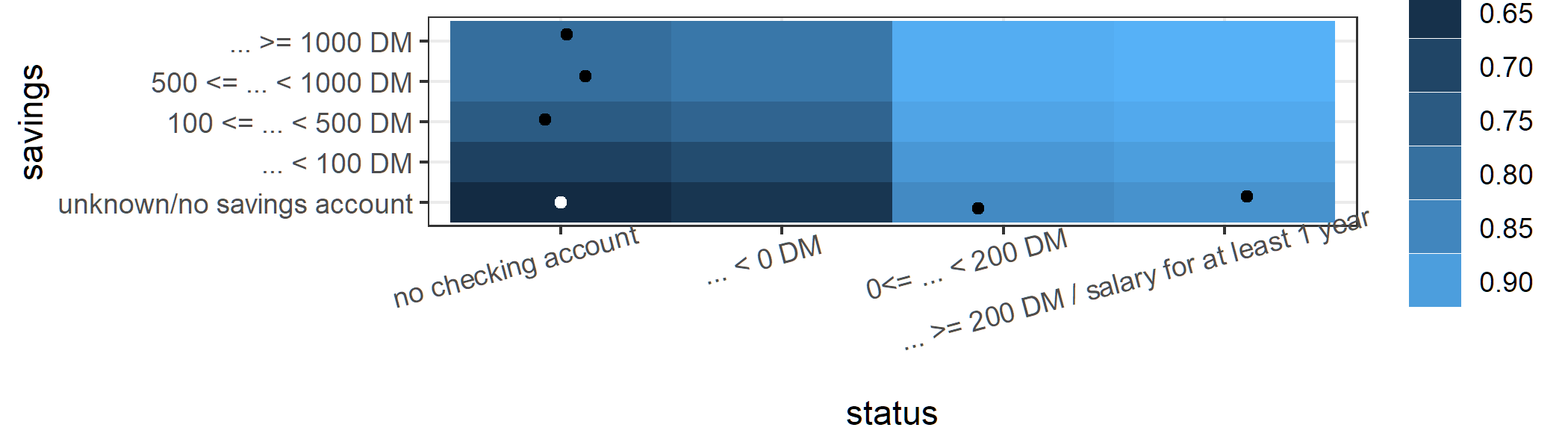

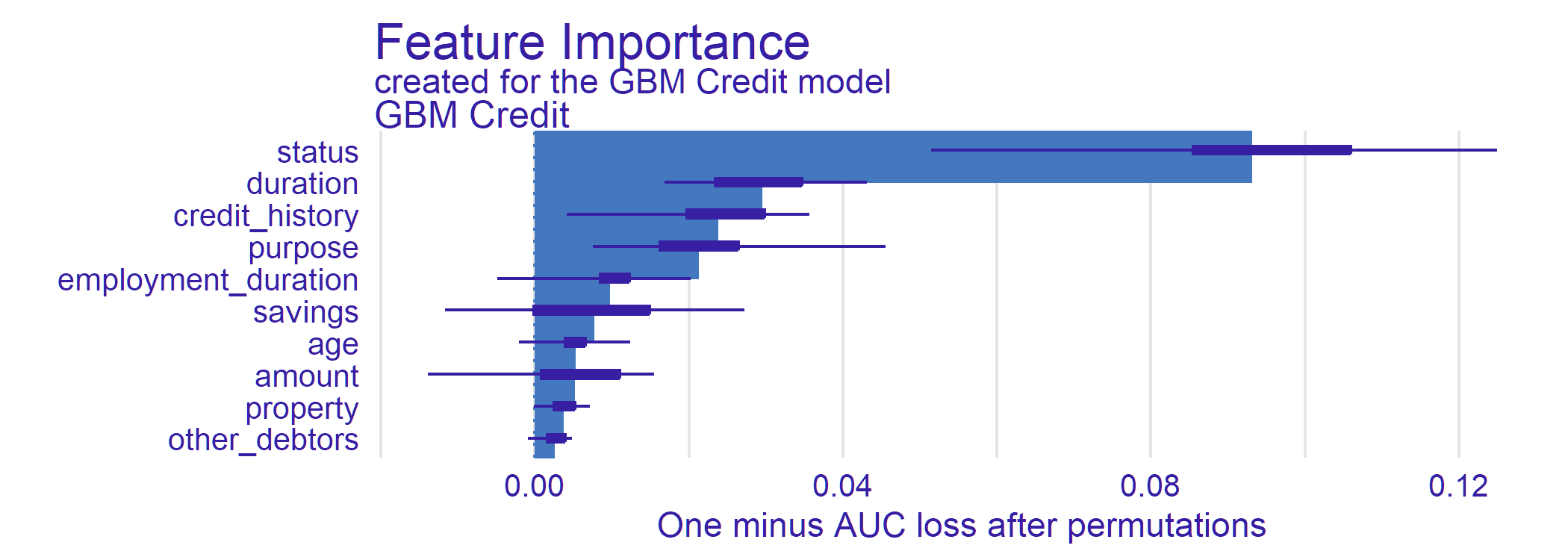

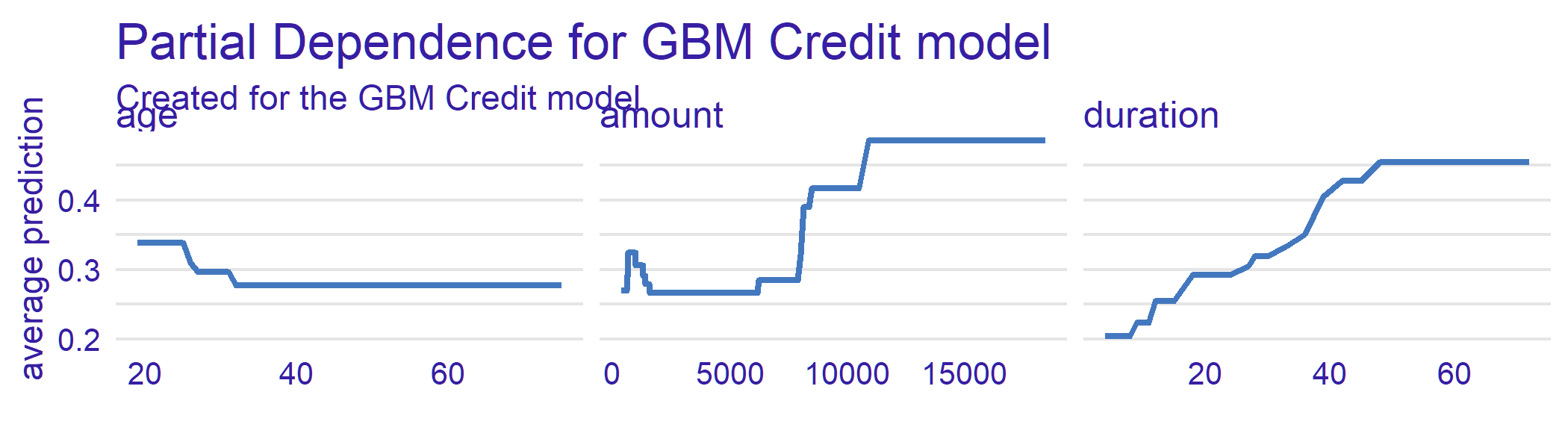

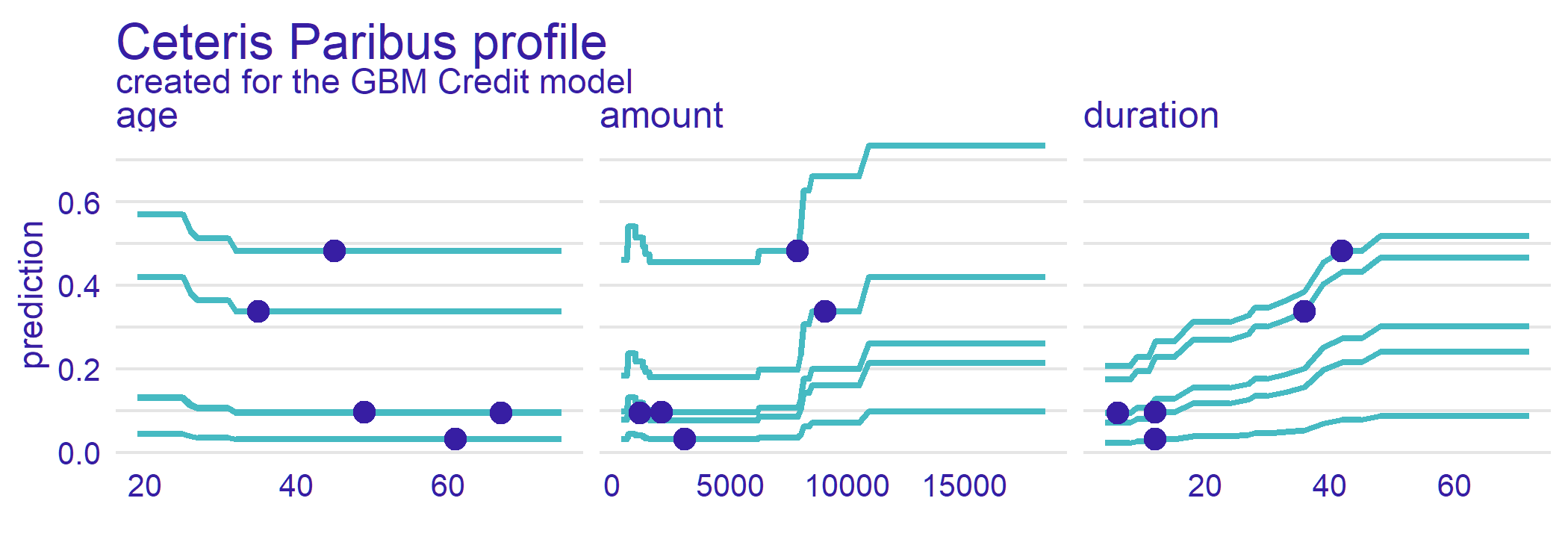

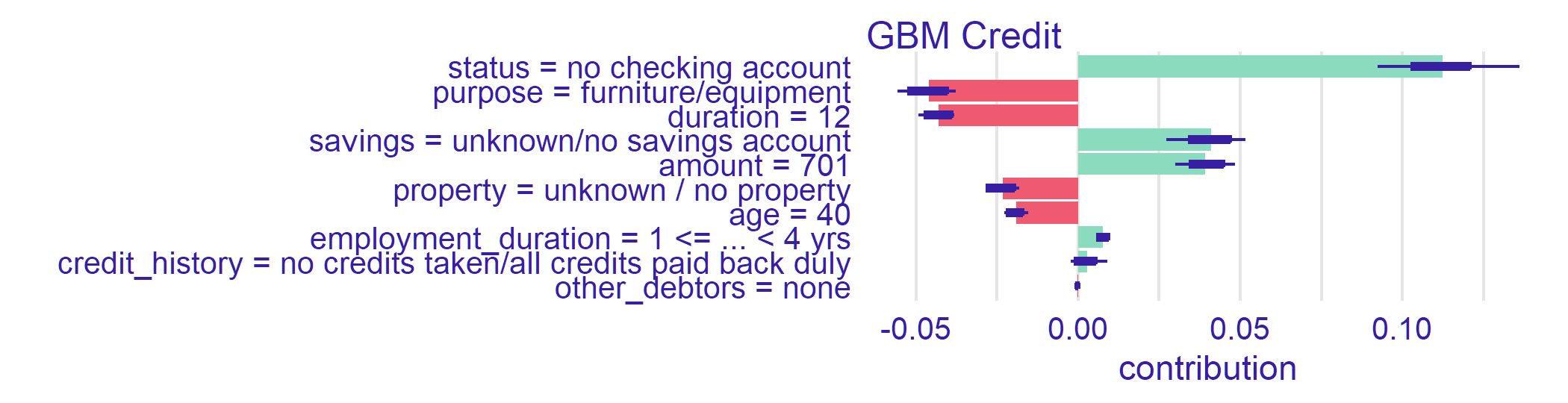

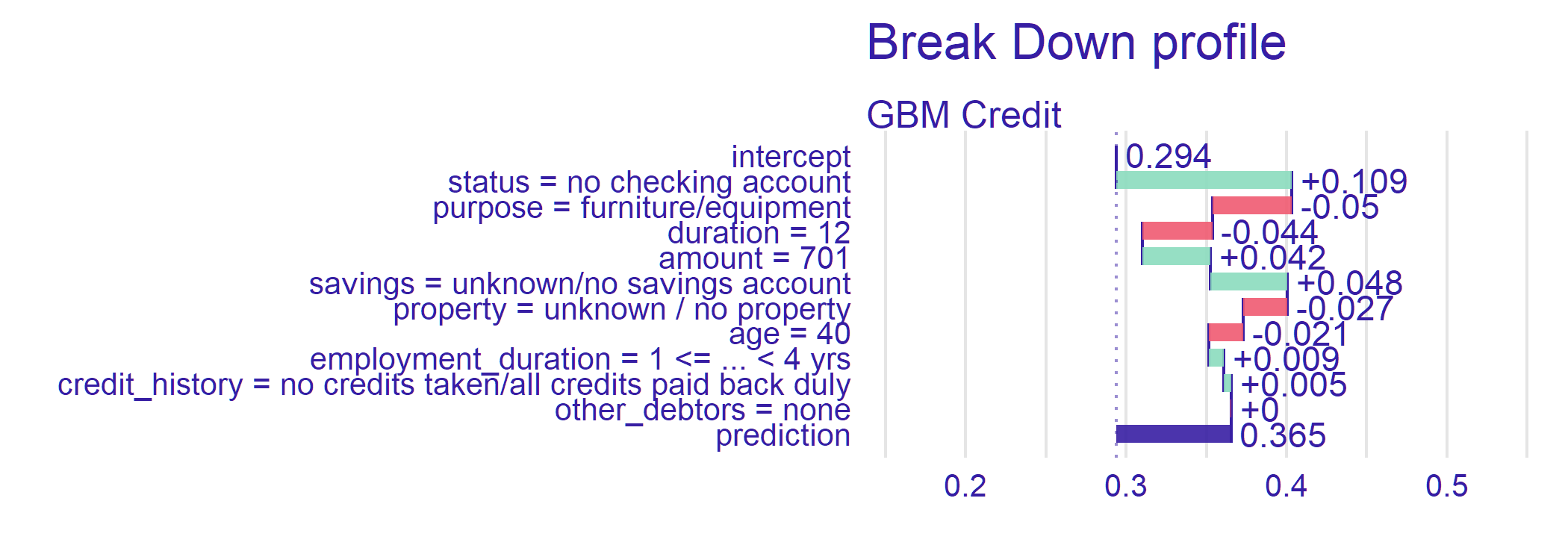

class: top, left, inverse, title-slide .title[ # Introduction to eXplainable AI (XAI) ] .author[ ### Yu-Kai Lin<br><br><br>Presented at CIS 9390<br> April 5, 2024 ] --- <style>.xe__progress-bar__container { top:0; opacity: 1; position:absolute; right:0; left: 0; } .xe__progress-bar { height: 0.25em; background-color: #0051BA; width: calc(var(--slide-current) / var(--slide-total) * 100%); } .remark-visible .xe__progress-bar { animation: xe__progress-bar__wipe 200ms forwards; animation-timing-function: cubic-bezier(.86,0,.07,1); } @keyframes xe__progress-bar__wipe { 0% { width: calc(var(--slide-previous) / var(--slide-total) * 100%); } 100% { width: calc(var(--slide-current) / var(--slide-total) * 100%); } }</style> # Prerequisites ```r install.packages(c( "iml", # Interpretable Machine Learning "gbm", # Generalized Boosted Models "glmnet", # Lasso Regularized Generalized Linear Models "mlr3verse", # The 'mlr3' Package Family for ML Workflow "devtools", # To install packages from GitHub "partykit", # A Toolkit for Recursive Partytioning "counterfactuals", # Counterfactual Explanations "DALEX", "DALEXtra" # moDel Agnostic Language for Exploration & eXplanation )) # To get additional mlr3 learners devtools::install_github("mlr-org/mlr3extralearners@*release") library(mlr3verse) library(mlr3extralearners) library(iml) library(counterfactuals) library(DALEX) library(DALEXtra) ``` --- # Agenda * Motivations and taxonomy of XAI methods * The `iml` package: a unified interface for model-agnostic interpretation methods * Feature Importance * Feature Effects * Surrogate Models * Shapley Values * The `counterfactuals` package * Counterfactual explanations * The `DALEX` package: for explanatory model analysis (EMA) * Global EMA * Local EMA .footnote[ <hr> [**Acknowledgement**] The materials in the following slides are largely based on the source below: * [Ch 12: Model Interpretation](https://mlr3book.mlr-org.com/chapters/chapter12/model_interpretation.html) of [Applied Machine Learning Using mlr3 in R](https://mlr3book.mlr-org.com/) by Bernd Bischl, Raphael Sonabend, Lars Kotthoff, Michel Lang ] --- # The dark secret at the heart of AI .bg-washed-green.b--dark-green.ba.bw2.br3.shadow-5.ph4.mt5[ No one really knows how the most advanced algorithms do what they do. That could be a problem. .tr[ -- Will Knight<br/> [MIT Technology Review](https://www.technologyreview.com/2017/04/11/5113/the-dark-secret-at-the-heart-of-ai/) ]] --- ## Explainability in AI/ML * The vast majority of machine learning (ML) models are designed to make good predictions, and they typically perform really well in that regard * As artificial intelligence (AI) becomes more advanced, humans are challenged to comprehend and retrace how the algorithm came to a result * .red[**Explainability**] is the degree to which a human can understand the cause of a decision * The higher the explainability of a ML model, the easier it is for someone to comprehend why certain decisions or predictions have been made * A model is more explainable than another model if its decisions are easier for a human to comprehend than decisions from the other model --- ## Explainability is Essential for ... * Model debugging - Why did my model make this mistake? * Feature engineering - How can I improve my model? * Detecting fairness issues - Does my model discriminate? * Human-AI cooperation - How can I understand and trust the model's decisions? * High-risk applications - Healthcare, finance, judicial, ... * Regulatory compliance - Does my model satisfy legal requirements? --- ## Glass-box vs. Black-box AI/ML Models * Glass-box models are the ones that intrinsically permit humans, at least the experts in the domain, to understand how a prediction was made * Black-box models, on the other hand, are extremely hard to explain and can hardly be understood even by domain experts * Aside from certain simple models (regressions, decision trees, etc.), most AI/ML models are black-box models and are not designed to offer explanations --- ## Taxonomies of XAI Methods * **Intrinsic or post hoc?** * This criteria distinguishes whether interpretability is achieved by restricting the complexity of the ML model (intrinsic) or by applying methods that analyze the model after training (post hoc). * **Model-specific or model-agnostic?** * Model-specific interpretation tools are limited to specific model classes, e.g., [xgboostExplainer](https://github.com/AppliedDataSciencePartners/xgboostExplainer), [randomForestExplainer](https://github.com/ModelOriented/randomForestExplainer), and [DeepLIFT](https://arxiv.org/abs/1704.02685) * Model-agnostic tools can be used on any ML model and are applied after the model has been trained (post hoc). They usually work by analyzing feature input and output pairs. * **Local or global?** * Does the interpretation method explain an individual prediction or the entire model behavior? --- # The `mlr3` Package for ML Workflow `mlr3` is a package for efficient, object-oriented programming on the ML building blocks. * Website: https://mlr3.mlr-org.com/index.html * Book: https://mlr3book.mlr-org.com/ * Reference manual: https://mlr3.mlr-org.com/reference/ Modular design: * Tasks: Stores data and metadata * Learners: ML algorithms ([table of all learners](https://mlr-org.com/learners.html)) * Resamplings: Define partitioning of task into train and test sets * Measures: Performance measures --- # German Credit Risk Dataset As a running example for this session, we will apply an ML method on the German Credit Risk dataset, which contains 1000 observations (700 good loans, 300 bad loans) and [21 variables](https://search.r-project.org/CRAN/refmans/fairml/html/german.credit.html). ```r library(mlr3verse) library(mlr3extralearners) tsk_german = tsk("german_credit") tsk_german ``` ``` ## <TaskClassif:german_credit> (1000 x 21): German Credit ## * Target: credit_risk ## * Properties: twoclass ## * Features (20): ## - fct (14): credit_history, employment_duration, foreign_worker, ## housing, job, other_debtors, other_installment_plans, ## people_liable, personal_status_sex, property, purpose, savings, ## status, telephone ## - int (3): age, amount, duration ## - ord (3): installment_rate, number_credits, present_residence ``` --- In the code below, we: * Select half of features from the task for modeling * Split the row ids of the task into a training set and a test set * Specify a [Gradient Boosting Machine (GBM)](http://uc-r.github.io/gbm_regression) learner * An ensemble of tree-based models * Boosting: adding new (weak) models to the ensemble *sequentially* to address errors in the previous round * In practice, we would fine-tune some key GBM parameters (e.g., number of trees and depth of trees), but we omit that step for now * Train the learner ```r tsk_german = tsk_german$select( cols = c("duration", "amount", "age", "status", "savings", "purpose", "credit_history", "property", "employment_duration", "other_debtors")) split = partition(tsk_german) lrn_gbm = lrn("classif.gbm", predict_type = "prob") lrn_gbm$train(tsk_german, row_ids = split$train) ``` ``` ## Distribution not specified, assuming bernoulli ... ``` --- # The `iml` Package * `iml` (Molnar, Bischl, and Casalicchio 2018) implements a unified interface for a variety of model-agnostic interpretation methods that facilitate the analysis and interpretation of machine learning models. * All `mlr3` models are supported by wrapping learners in an `Predictor` object * The `Predictor` object is constructed from our trained learner & heldout test data: ```r library(iml) # features in test data credit_x = tsk_german$data(rows = split$test, cols = tsk_german$feature_names) # target in test data credit_y = tsk_german$data(rows = split$test, cols = tsk_german$target_names) *predictor = Predictor$new(lrn_gbm, data = credit_x, y = credit_y) ``` .footnote[ <hr> * Molnar, Christoph, Bernd Bischl, and Giuseppe Casalicchio. 2018. "iml: An R Package for Interpretable Machine Learning." JOSS 3 (26): 786. https://doi.org/10.21105/joss.00786. ] --- ## Feature Importance * When deploying a model in practice, it is often of interest to know which features contribute the most to the predictive performance of the model. * A very popular feature importance method is the .blue[**permutation feature importance (PFI)**] * Originally introduced by Breiman (2001) for random forests * Adapted by Fisher et al. (2019) as a model-agnostic feature importance measure * Intuition of PFI: If a variable is important, then we expect that, after permuting the values of the variable, the model's performance will worsen. The larger the change in the performance, the more important is the variable. .footnote[ <hr> * Breiman, Leo. 2001. "Random Forests." Machine Learning 45: 5-32. https://doi.org/10.1023/A:1010933404324. * Fisher, Aaron, Cynthia Rudin, and Francesca Dominici. "All models are wrong, but many are useful: Learning a variable's importance by studying an entire class of prediction models simultaneously." Journal of Machine Learning Research 20, no. 177 (2019): 1-81. ] --- ```r importance = FeatureImp$new( predictor, n.repetitions = 50, loss = "ce") # cross-entropy loss importance$plot() ``` <!-- --> By default, `FeatureImp` calculates the ratio of the model performance before and after permutation as an importance value --- The difference of the performance measures can be returned by passing `compare = "difference"` when calling `$new()` ```r importance = FeatureImp$new(predictor, n.repetitions = 50, loss = "ce", * compare = "difference") importance$plot() ``` <!-- --> --- ## Feature Effects * Feature effect methods describe how a feature contributes towards the model predictions by analyzing how the predictions change when changing a feature. * There are **local** and **global** feature effect methods. * Global: how predictions change on average when a feature is changed * Local: how a single prediction of a given observation changes when a feature value is changed --- Two popular feature effects methods: * .red[Partial dependence (PD)] plots (Friedman 2001) can be used to visualize **global** feature effects by visualizing how model predictions change on average when varying the values of a given feature of interest. * .red[Individual conditional expectation (ICE)] curves (Goldstein et al. 2015) (a.k.a. Ceteris Paribus plots) are a **local** feature effects method that display how the prediction of a single observation changes when varying a feature of interest, while all other features stay constant. .footnote[ <hr> * Friedman, Jerome H. 2001. "Greedy Function Approximation: A Gradient Boosting Machine." The Annals of Statistics 29 (5). https://doi.org/10.1214/aos/1013203451. * Goldstein, Alex, Adam Kapelner, Justin Bleich, and Emil Pitkin. 2015. "Peeking Inside the Black Box: Visualizing Statistical Learning with Plots of Individual Conditional Expectation." Journal of Computational and Graphical Statistics 24 (1): 44-65. https://doi.org/10.1080/10618600.2014.907095. ] --- ```r effect = FeatureEffect$new(predictor, feature = "amount", * method = "pdp+ice") effect$plot() ``` <!-- --> Partial dependence (PD) plot (yellow) and individual conditional expectation (ICE) curves (black) that show how the credit amount affects the predicted credit risk. --- ## Surrogate Models * Interpretable models such as decision trees or linear models can be used as surrogate models to approximate or mimic an, often very complex, black box model. * Inspecting the surrogate model can provide insights into the behavior of a black box model, for example by looking at the model coefficients in a linear regression or splits in a decision tree. * We can differentiate between **local** surrogate models, which approximate a model locally around a specific data point of interest, and **global** surrogate models which approximate the model across the entire input space. --- ### Global Surrogate Model: TreeSurrogate ```r tree_surrogate = TreeSurrogate$new(predictor, maxdepth = 2L) ``` Before inspecting this model, we can check if the surrogate model approximates the prediction model accurately: ```r pred_surrogate = tree_surrogate$predict(credit_x, type = "class")$.class pred_surrogate = factor(pred_surrogate, levels = c("good", "bad")) pred_gbm = lrn_gbm$predict_newdata(credit_x)$response confusion = mlr3measures::confusion_matrix(pred_surrogate, pred_gbm, positive = "good") confusion ``` ``` ## truth ## response good bad ## good 269 4 ## bad 38 19 ## acc : 0.8727; ce : 0.1273; dor : 33.6250; f1 : 0.9276 ## fdr : 0.0147; fnr : 0.1238; fomr: 0.6667; fpr : 0.1739 ## mcc : 0.4731; npv : 0.3333; ppv : 0.9853; tnr : 0.8261 ## tpr : 0.8762 ``` --- .left-column-6[ The plot below shows the distribution of the predicted outcomes for each terminal node identified by the tree surrogate. ```r tree_surrogate$plot() ``` <!-- --> ] .right-column-4[ The top two nodes consist of applications with a positive balance in the account (`status` is either `"0 <= ... < 200 DM"`, `"... >= 200 DM"` or `"salary for at least 1 year"`) and either a duration of less or equal than 42 months (top left), or more than 42 months (top right). The bottom nodes contain applicants that either have no checking account or a negative balance (`status`) and either a duration of less than or equal to 36 months (bottom left) or more than 36 months (bottom right). ] --- Or we could access the trained tree surrogate via the `$tree` field of the `TreeSurrogate` object and then print them use `partykit::print.party`: ```r partykit::print.party(tree_surrogate$tree) ``` ``` ## [1] root ## | [2] status in no checking account, ... < 0 DM ## | | [3] duration <= 36: * ## | | [4] duration > 36: * ## | [5] status in 0<= ... < 200 DM, ... >= 200 DM / salary for at least 1 year ## | | [6] duration <= 42: * ## | | [7] duration > 42: * ``` --- ### Local Surrogate Model * Local surrogate models allow us to interpret model behavior for one specific individual. * To illustrate this, we will select a data point to explain. * As we are dealing with people, we will name our observation "Charlie" and first look at the black box predictions: ```r Charlie = credit_x[35, ] gbm_predict = predictor$predict(Charlie) gbm_predict ``` ``` ## good bad ## 1 0.6345884 0.3654116 ``` The model predicts Charlie has 63.5% probability in the class 'good'. --- * The underlying surrogate model is a locally weighted LASSO * The [implementation](https://christophm.github.io/iml/reference/LocalModel.html) is very similar to LIME (Ribeiro et al. 2016) * Key parameters in the local surrogate model: * `k`: a pre-defined number of features per class, `k` (default is 3), will have a non-zero coefficient and as such are the `k` most influential features, below we set `k = 2`. * `gower.power`: the size of the neighborhood for the local model (default is `gower.power = 1`). The smaller the value, the more the model will focus on points closer to the point of interest, below we set `gower.power = 0.1`. ```r predictor$class = "good" # explain the 'good' class local_surrogate = LocalModel$new( predictor, Charlie, * gower.power = 0.1, k = 2) ``` .footnote[ <hr> * Ribeiro, Marco Tulio, Sameer Singh, and Carlos Guestrin. "" Why should i trust you?" Explaining the predictions of any classifier." In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, pp. 1135-1144. 2016. ] --- ```r rownames(local_surrogate$results) = NULL local_surrogate$results[, c("feature.value", "effect")] ``` ``` ## feature.value effect ## 1 duration=12 -0.01999932 ## 2 status=no checking account -0.08544408 ``` This means that we have a linear regression model as a proxy/surrogate for the GBM model: $$ \hat p_{credit risk=good} = intercept + \beta_1 \times x_1 + \beta_2 \times x_2 + \epsilon $$ where * `\(\beta_1 \times x_1 = -0.01999932\)` * `\(\beta_2 \times x_2 = -0.08544408\)` --- ### Shapley Values * Shapley values were originally developed in the context of cooperative game theory to study how the payout of a game can be fairly distributed among the players that form a team. * This concept has been adapted for use in ML as a local interpretation method to explain the contributions of each input feature to the final model prediction of a single observation. * The 'players' are the features * Shapley values estimate how much each input feature contributed to the final prediction for a single observation (after subtracting the mean prediction). * The Shapley value of a feature is calculated by considering all possible subsets of features and computing the difference in the model prediction with and without the feature of interest included. --- * The exact computation of Shapley values is time consuming, as it involves taking into account all possible combinations of features to calculate the marginal contribution of a feature. * The estimation of Shapley values (phi) is often approximated. * The exact computation would involve taking into account all possible combinations of features to calculate the marginal contribution of a feature. * The `sample.size` represents the number of times coalitions/marginals are sampled. * This argument can be increased to obtain a more accurate approximation of exact Shapley values. ```r shapley = Shapley$new( predictor, x.interest = Charlie, sample.size = 200) # default sample.size = 100 ``` --- ```r shapley$plot() # phi is the Shapley value ``` <!-- --> * The actual prediction (0.63) displays the original model prediction for Charlie; the average prediction (0.71) displays the average prediction over the sampled coalitions/marginals. * The Shapley value (phi) of each feature tell us how the feature could shift the predicted probability of being creditworthy (i.e., `credit_risk` = `good`). --- # The `counterfactuals` Package * .red[**Counterfactual explanations**] try to identify the smallest possible changes to the input features of a given observation that would lead to a different prediction (Wachter, Mittelstadt, and Russell 2017). * In other words, a counterfactual explanation provides an answer to the question: .blue["What changes in the current feature values are necessary to achieve a different prediction?"] * Counterfactual explanations can have many applications in different areas such as healthcare, finance, and criminal justice, where it may be important to understand how small changes in input features could affect the model's prediction. * E.g., to suggest lifestyle changes to a patient to reduce their risk of developing a particular disease, or to suggest actions that would increase the chance of a credit being approved. .footnote[ <hr> * Wachter, Sandra, Brent Mittelstadt, and Chris Russell. "Counterfactual explanations without opening the black box: Automated decisions and the GDPR." Harvard Journal of Law & Technology. 31 (2017): 841. ] --- * A simple counterfactual method is the .red[**What-If method**] where, for a given prediction to explain, the counterfactual is the closest data point in the dataset with the desired prediction. * Usually, many possible counterfactual data points can exist, but early counterfactual methods only produce a single, somewhat arbitrary counterfactual explanation. * In contrast, the .red[**multi-objective counterfactuals method (MOC)**] (Dandl et al. 2020) generates multiple artificially-generated counterfactuals that may not be equal to observations in a given dataset. .footnote[ <hr> * Dandl, Susanne, Christoph Molnar, Martin Binder, and Bernd Bischl. 2020. "Multi-Objective Counterfactual Explanations." In Parallel Problem Solving from Nature PPSN XVI, 448-69. Springer International Publishing. https://doi.org/10.1007/978-3-030-58112-1_31. ] --- ## What-If Method * Our model previously predicted Charlie as having good credit with a probability of **63.5%**. * We can use the What-If method to understand .blue[_how the features need to change for this predicted probability to increase to **75%**_]. ```r library(counterfactuals) whatif = WhatIfClassif$new(predictor, n_counterfactuals = 1L) cfe = whatif$find_counterfactuals(Charlie, desired_class = "good", desired_prob = c(0.75, 1)) data.frame(cfe$evaluate(show_diff = TRUE)) ``` ``` ## age amount credit_history duration employment_duration other_debtors property purpose savings status dist_x_interest no_changed dist_train dist_target minimality ## 1 -3 1417 <NA> -3 <NA> <NA> <NA> <NA> <NA> ... < 0 DM 0.1176141 4 0 0 1 ``` .smaller[Here we can see that, to achieve a predicted probability of at least 75% for good credit, Charlie would have to be three years younger, the duration of credit would have to be reduced by three months, the amount would have to be increased by 1417 DM (Deutsche Mark) and the status would have to be `'... < 0 DM'` (instead of 'no checking account').] --- ## MOC Method * Calling the multi-objective counterfactuals (MOC) method is similar to the What-If method but with a `MOCClassif()` object. * We set the `epsilon` parameter to 0 to penalize counterfactuals in the optimization process with predictions outside the desired range. * With MOC, we can also prohibit changes in specific features via the `fixed_features` argument, below we restrict changes in the 'age' variable. * For illustrative purposes, we set `n_generations = 30L`, which means that we only run the multi-objective optimizer for 30 generations. ```r moc = MOCClassif$new(predictor, epsilon = 0, n_generations = 30L, fixed_features = "age") cfe_multi = moc$find_counterfactuals(Charlie, desired_class = "good", desired_prob = c(0.75, 1)) ``` --- The multi-objective approach does not guarantee that all counterfactuals have the desired prediction so we use `$subset_to_valid()` to restrict counterfactuals to those we are interested in: ```r cfe_multi$subset_to_valid() cfe_multi ``` .small[ ``` ## 7 Counterfactual(s) ## ## Desired class: good ## Desired predicted probability range: [0.75, 1] ## ## Head: ## age amount credit_history duration employment_duration other_debtors property purpose savings ## <int> <int> <fctr> <int> <fctr> <fctr> <fctr> <fctr> <fctr> ## 1: 40 701 no credits taken/all credits paid back duly 12 1 <= ... < 4 yrs none unknown / no property furniture/equipment 100 <= ... < 500 DM ## 2: 40 701 no credits taken/all credits paid back duly 12 1 <= ... < 4 yrs none unknown / no property furniture/equipment unknown/no savings account ## 3: 40 701 no credits taken/all credits paid back duly 12 1 <= ... < 4 yrs none unknown / no property furniture/equipment 500 <= ... < 1000 DM ## status ## <fctr> ## 1: no checking account ## 2: ... >= 200 DM / salary for at least 1 year ## 3: no checking account ``` ] --- For a concise overview of the required feature changes, we can use the `plot_freq_of_feature_changes()` method, which visualizes the frequency of feature changes across all returned counterfactuals. ```r cfe_multi$plot_freq_of_feature_changes() ``` <!-- --> We can see that `'status'` and `'savings'` were changed most frequently in the counterfactuals. --- To see _how_ the features were changed, we can visualize the counterfactuals for two features on a two-dimensional ICE plot. ```r cfe_multi$plot_surface(feature_names = c("status", "savings")) + theme(axis.text.x = element_text(angle = 15, hjust = .7)) ``` <!-- --> .smaller[In the plot, the colors indicates the predicted value of the model when `'status'` and `'savings'` differ while all other features are set to the true (Charlie's) values. The white point displays the true prediction (Charlie), and the black points are the counterfactuals that only propose changes in the two features.] --- # The `DALEX` Package * `DALEX` (Biecek 2018) implements a similar set of methods as `iml`, but the architecture of `DALEX` is oriented towards model comparison. * `DALEX` also supports local and global XAI methods .footnote[ <hr> * Biecek, Przemyslaw. 2018. "DALEX: Explainers for Complex Predictive Models in R." Journal of Machine Learning Research 19 (84): 1-5. https://jmlr.org/papers/v19/18-416.html. ] --- * For models created with the `mlr3` package, we would use `explain_mlr3()`: ```r library(DALEX) library(DALEXtra) gbm_exp = DALEXtra::explain_mlr3(lrn_gbm, data = credit_x, y = as.numeric(credit_y$credit_risk == "bad"), label = "GBM Credit") ``` ``` ## Preparation of a new explainer is initiated ## -> model label : GBM Credit ## -> data : 330 rows 10 cols ## -> target variable : 330 values ## -> predict function : yhat.LearnerClassif will be used ( default ) ## -> predicted values : No value for predict function target column. ( default ) ## -> model_info : package mlr3 , ver. 0.18.0 , task classification ( default ) ## -> predicted values : numerical, min = 0.02103205 , mean = 0.2938789 , max = 0.8811732 ## -> residual function : difference between y and yhat ( default ) ## -> residuals : numerical, min = -0.8811732 , mean = 0.006121096 , max = 0.9445791 ## A new explainer has been created! ``` --- ## Feature Importance Feature importance methods can be calculated with `model_parts()` and then plotted. ```r gbm_effect = model_parts(gbm_exp, B = 20, type = "difference") gbm_effect ``` ``` ## variable mean_dropout_loss label ## 1 _full_model_ 0.000000000 GBM Credit ## 2 other_debtors 0.002582098 GBM Credit ## 3 property 0.003714636 GBM Credit ## 4 amount 0.005245092 GBM Credit ## 5 age 0.005321614 GBM Credit ## 6 savings 0.007700380 GBM Credit ## 7 employment_duration 0.009736980 GBM Credit ## 8 purpose 0.021327999 GBM Credit ## 9 credit_history 0.023785474 GBM Credit ## 10 duration 0.029521405 GBM Credit ## 11 status 0.093132625 GBM Credit ## 12 _baseline_ 0.262069833 GBM Credit ``` --- ```r plot(gbm_effect) ``` <!-- --> --- ## Feature Effects The global XAI method--partial dependence (PD) plots--can be calculated with `model_profile()`: ```r gbm_profiles = model_profile( gbm_exp, variables = c("age", "amount", "duration")) plot(gbm_profiles) + ggtitle("Partial Dependence for GBM Credit model") ``` <!-- --> --- The local XAI method--individual conditional expectation (ICE) curves (a.k.a. Ceteris Paribus plots)--can be calculated with `predict_profile()`: ```r plot(predict_profile(gbm_exp, credit_x[1:5, ])) ``` <!-- --> --- ### Shapley Values The `predict_parts()` function can also be used to plot Shapley values with the SHAP algorithm (Lundberg and Lee 2017) by setting `type = "shap"`: ```r predict_parts(gbm_exp, new_observation = Charlie, type = "shap", B = 20) |> plot() ``` <!-- --> .footnote[ <hr> * Lundberg, Scott M., and Su-In Lee. "A unified approach to interpreting model predictions." Advances in neural information processing systems 30 (2017). ] --- ### Break-down Plots [**Break-down plots**](https://ema.drwhy.ai/breakDown.html) decompose the model's prediction into contributions that can be attributed to different explanatory variables. These are calculated with `predict_parts()`: ```r predict_parts(gbm_exp, new_observation = Charlie, type = "break_down") |> plot() ``` <!-- --> --- # Conclusions * In this session, we learned how to gain post hoc insights into a model trained with `mlr3` by using the most popular approaches from the field of XAI. * The XAI methods are all model-agnostic and so do not depend on specific model classes. * `iml` and `DALEX` offer a wide range of (partly) overlapping methods, while `counterfactuals` focuses solely on counterfactual methods. * We demonstrated on `tsk("german_credit")` how these packages offer an in-depth analysis of a GBM model fitted with `mlr3`.